Table of Contents

- What Is Read State

- Why Performance Matters (Even If It Wasn't Obvious at First)

- What Discord Built with Go, and What They Tried Before Switching

- What If They Didn’t Switch? Could Go Have Worked?

- Go Has Improved, but I Still Support Discord’s Switch

- How Rust Actually Saved It

- Final Thoughts – Why Every Real Engineer Should Try Rust

I’ve tried both Go and Rust, and right now I’m focusing more seriously on learning Rust. While exploring the language and its ecosystem, I came across an interesting story about Discord rewriting one of their backend services in Rust after having some issues with Go.

At first, I thought it was just another “Rust is faster” headline. But after reading Discord’s engineering blog and following some discussions, I realized there was more to it than just speed.

This blog isn’t about hyping Rust or criticizing Go. I just want to share what really happened, why it mattered, and what Discord tried before making the switch. I’ll also talk about what they could have done differently and why I still believe choosing Rust was the right decision.

If you’re curious about backend performance, deciding between Go and Rust, or just interested in real-world stories, I hope this gives you a balanced and useful perspective. It’s a bit technical, not really for beginners, but feel free to take what you want from it.

If you hate reading long posts, I get it; feel free to skim or skip. But if you’re into real-world tech stories and want to understand what actually happens behind the scenes, stick around. There’s some good stuff here for anyone curious about how big systems really work.

What Is Read State

To understand Discord's decision, we first need to understand what this “Read State” thing actually is.

Whenever you open Discord and look at your channels, the app needs to know which messages you’ve already seen and which ones are new. That’s exactly what the Read State does. It keeps track of every message you've read in every channel, across every server you're in.

Behind the scenes, there's a service that handles this for every user, every channel, and every message. It stores small bits of info like how many unread messages or @mentions you have in a specific channel. So every time you read a message, open a channel, or get a mention, the Read State updates.
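To make the idea concrete, here is a minimal Rust sketch of what one Read State could look like. It is keyed by a (user, channel) pair and holds the small bits of info described above. The field names (`last_read_message_id`, `mention_count`), the `ReadStateKey` type, and the rule that reading a channel clears its mentions are all assumptions for illustration. This is not Discord’s actual schema.

```rust
use std::collections::HashMap;

/// Hypothetical shape of one Read State (not Discord's real schema).
#[derive(Debug, Default, Clone, PartialEq)]
struct ReadState {
    last_read_message_id: u64,
    mention_count: u32,
}

/// One Read State exists per (user, channel) pair.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
struct ReadStateKey {
    user_id: u64,
    channel_id: u64,
}

/// The user opened the channel and saw messages up to `message_id`.
/// Assumption: reading a channel clears its @mention count.
fn mark_read(states: &mut HashMap<ReadStateKey, ReadState>, key: ReadStateKey, message_id: u64) {
    let state = states.entry(key).or_default();
    state.last_read_message_id = state.last_read_message_id.max(message_id);
    state.mention_count = 0;
}

/// Someone @mentioned the user in this channel.
fn record_mention(states: &mut HashMap<ReadStateKey, ReadState>, key: ReadStateKey) {
    states.entry(key).or_default().mention_count += 1;
}

/// A channel is unread if its newest message is past what the user has read.
fn is_unread(states: &HashMap<ReadStateKey, ReadState>, key: ReadStateKey, latest_message_id: u64) -> bool {
    states.get(&key).map_or(0, |s| s.last_read_message_id) < latest_message_id
}

fn main() {
    let mut states = HashMap::new();
    let key = ReadStateKey { user_id: 1, channel_id: 10 };
    record_mention(&mut states, key);
    println!("{:?}", states[&key]);
    mark_read(&mut states, key, 500);
    println!("unread: {}", is_unread(&states, key, 500));
}
```

Every message send, read, and mention touches one of these small records. That is why the service sits on such a hot path.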

What seemed like a small feature at first quickly became a serious challenge. As Discord grew to over 250 million users, the Read State system had to handle millions of updates every second. Even small delays of just a few milliseconds started to matter, making the app feel slow or out of sync. At that scale, performance problems don’t just show up in logs or tests; they affect real users, even when it’s not always easy to notice.

Why Performance Matters (Even If It Wasn't Obvious at First)

At first, the Read State service worked just fine. It was fast most of the time, and no one really noticed any major problems. The issue wasn’t the average performance; it was the spikes.

As Discord grew, more users meant more messages, more read tracking, and a much bigger load on the system. Even a tiny delay started to matter. The Read State service is used on every message send, every read, every channel open, and every reconnect. It’s always running in the background.

The team noticed that latency would spike on a regular schedule, roughly every two minutes. These spikes weren’t always huge, but they were enough to make Discord feel slower and less responsive. Imagine opening a channel and waiting for your messages to load, even for an extra second. That’s a bad experience.

In high-scale systems, consistency is just as important as speed. If your service is fast 95% of the time but lags the other 5%, users still feel that lag. In Discord’s case, those recurring pauses came from something deeper: Go’s runtime.
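A quick way to see why averages hide spikes is to compute the mean and a high percentile of the same latencies. Below is a minimal Rust sketch that uses the nearest-rank percentile method on made-up numbers: 95 requests at 1 ms and 5 at 50 ms. The helper names `mean` and `percentile` are hypothetical.

```rust
/// Arithmetic mean of latency samples (microseconds).
fn mean(samples: &[u64]) -> f64 {
    samples.iter().sum::<u64>() as f64 / samples.len() as f64
}

/// Nearest-rank percentile of an ascending-sorted slice: the smallest sample
/// such that at least `p` percent of all samples are less than or equal to it.
fn percentile(sorted: &[u64], p: f64) -> u64 {
    assert!(!sorted.is_empty() && p > 0.0 && p <= 100.0);
    let rank = (p * sorted.len() as f64 / 100.0).ceil() as usize; // 1-based rank
    sorted[rank - 1]
}

fn main() {
    // Made-up data: 95 requests at 1 ms and 5 slow ones at 50 ms, in microseconds.
    let mut latencies_us: Vec<u64> = vec![1_000; 95];
    latencies_us.extend(vec![50_000; 5]);
    latencies_us.sort_unstable();

    println!("mean = {} us", mean(&latencies_us)); // looks harmless on a dashboard
    println!("p50  = {} us", percentile(&latencies_us, 50.0));
    println!("p99  = {} us", percentile(&latencies_us, 99.0)); // exposes the spikes
}
```

With these numbers the mean is 3.45 ms and the median is 1 ms, but p99 is 50 ms. For a service like this, the tail percentiles are the numbers to watch, not the averages.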

What Discord Built with Go :- And What They Tried Before Switching

Before jumping to Rust, Discord built the Read State service in Go. The system had a smart design. To keep things fast, they used an in-memory LRU cache that stored millions of Read States — one for each user-channel pair. If the data wasn’t in the cache, it would be loaded from Cassandra, a high-performance database.

This cache handled hundreds of thousands of updates per second, and Discord used it to track things like unread messages or @mentions. To avoid hitting the database constantly, they only wrote back to Cassandra when:

- A Read State was evicted from the cache

- A timer expired (for example, 30 seconds after the last update)
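The write-back scheme above can be sketched as a small LRU cache. This is a simplified, single-threaded Rust sketch, and it makes several assumptions. An in-memory `HashMap` stands in for Cassandra, and time is passed in as plain seconds. Recency is tracked with a `BTreeMap` of logical ticks. Every name (`ReadStateCache`, `update`, `flush_expired`) is hypothetical rather than taken from Discord’s code.

```rust
use std::collections::{BTreeMap, HashMap};

/// (user_id, channel_id): one Read State per user-channel pair.
type Key = (u64, u64);

#[derive(Clone, Debug, Default, PartialEq)]
struct ReadState {
    unread: u32,
    mentions: u32,
}

struct Entry {
    state: ReadState,
    last_used: u64,           // logical clock tick of the most recent access
    last_update: Option<u64>, // time (secs) of the latest change not yet written back
}

/// Hypothetical write-back LRU cache; `db` is an in-memory stand-in for Cassandra.
struct ReadStateCache {
    capacity: usize,
    flush_after_secs: u64,
    tick: u64,
    entries: HashMap<Key, Entry>,
    lru: BTreeMap<u64, Key>, // tick -> key, so the first entry is the least recently used
    db: HashMap<Key, ReadState>,
}

impl ReadStateCache {
    fn new(capacity: usize, flush_after_secs: u64) -> Self {
        assert!(capacity > 0);
        Self {
            capacity,
            flush_after_secs,
            tick: 0,
            entries: HashMap::new(),
            lru: BTreeMap::new(),
            db: HashMap::new(),
        }
    }

    /// Applies `change` to the Read State for `key`, loading it from `db` on a miss.
    fn update(&mut self, key: Key, now: u64, change: impl FnOnce(&mut ReadState)) {
        self.tick += 1;
        if let Some(old_tick) = self.entries.get(&key).map(|e| e.last_used) {
            self.lru.remove(&old_tick); // cache hit: forget the old recency position
        } else {
            if self.entries.len() >= self.capacity {
                self.evict_oldest();
            }
            let state = self.db.get(&key).cloned().unwrap_or_default();
            self.entries.insert(key, Entry { state, last_used: 0, last_update: None });
        }
        let entry = self.entries.get_mut(&key).expect("entry was just ensured");
        entry.last_used = self.tick;
        entry.last_update = Some(now);
        change(&mut entry.state);
        self.lru.insert(self.tick, key);
    }

    /// Write-back on eviction: persist the least recently used entry if it has unsaved changes.
    fn evict_oldest(&mut self) {
        if let Some((_, key)) = self.lru.pop_first() {
            let entry = self.entries.remove(&key).expect("lru and entries stay in sync");
            if entry.last_update.is_some() {
                self.db.insert(key, entry.state);
            }
        }
    }

    /// Timer-driven write-back: persist entries whose last change is `flush_after_secs` old.
    fn flush_expired(&mut self, now: u64) {
        for (key, entry) in self.entries.iter_mut() {
            if let Some(t) = entry.last_update {
                if now.saturating_sub(t) >= self.flush_after_secs {
                    self.db.insert(*key, entry.state.clone());
                    entry.last_update = None;
                }
            }
        }
    }
}

fn main() {
    let mut cache = ReadStateCache::new(2, 30);
    cache.update((1, 10), 0, |s| s.unread += 1);
    cache.update((1, 11), 5, |s| s.mentions += 1);
    cache.update((1, 12), 6, |s| s.unread += 3); // evicts (1, 10) and writes it back
    cache.flush_expired(40); // the other two have been idle for 30+ seconds
    println!("{} Read States persisted", cache.db.len());
}
```

A real service would drive `flush_expired` from a timer and persist asynchronously. This sketch only shows what triggers each write-back. In Go, a structure like this holds millions of live objects, and the garbage collector has to walk all of them.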

So far, so good. But here's the problem: every few minutes, the system would experience sudden latency spikes and CPU jumps.

After a deep dive, the Discord team discovered that the spikes weren’t caused by inefficient code or bad architecture. They were caused by Go’s garbage collector (GC).

By design, Go’s GC runs periodically to clean up unused memory. Even if your program barely allocates, the Go runtime forces a GC cycle whenever none has run in the last 2 minutes. During that cycle, the garbage collector has to scan large live structures, like the LRU cache, to figure out what memory can be freed. That scanning causes CPU usage to spike and delays request handling.

What they tried without switching languages:

1. Tuning GC settings live

They exposed a dynamic endpoint that allowed them to tweak garbage collector parameters on the fly. This gave them more control, but still couldn’t eliminate the root problem.

2. Shrinking the cache to reduce GC workload

By making the cache smaller, the garbage collector had less memory to scan. This actually reduced the latency spikes. But it came at a serious cost: more cache misses. That meant more frequent reads from Cassandra, slower response times, and added pressure on the database.

3. Partitioning the cache into smaller chunks

They split the cache into isolated segments so each GC cycle only had to scan a smaller portion of memory. This localized the impact of pauses and smoothed things out somewhat, but didn’t fully solve the issue.

4. Load testing different configurations

They experimented with various combinations of cache size, GC tuning, and partitioning strategies to find a stable middle ground. While it helped, it still wasn’t consistent enough for the kind of real-time performance they needed.

And yes, they improved things a lot, but they never completely eliminated the latency spikes. The root issue remained: Go’s GC just wasn’t predictable enough for a service that needed microsecond consistency at scale.

At this point, the team was already using Rust successfully in other parts of Discord. So instead of fighting GC anymore, they decided to try rewriting the service in Rust and see what happened.

What If They Didn’t Switch? Could Go Have Worked?

Let’s be fair here. Go wasn’t doomed. There were other paths Discord could’ve taken to squeeze more performance out of the Go implementation.

For example:

1. Redesigned the cache structure to reduce GC pressure

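By rethinking how the cache was laid out in memory, they might have reduced the amount of work the garbage collector needed to do. For example, Go’s GC does not scan memory that contains no pointers, so storing entries as flat, pointer-free values can cut scanning work. But this would require significant architectural changes with no guarantee of eliminating GC spikes.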

2. Used sync.Pool to reuse objects and avoid allocations

Go's sync.Pool allows object reuse and can help reduce memory churn. It minimizes the need for frequent allocations, which in turn lowers the GC load — but it doesn’t eliminate it entirely.

3. Shifted parts of the workload to C or Rust via cgo or FFI

Critical hot paths could have been offloaded to native code through cgo, either to C directly or to Rust code exposed through a C-compatible interface. This reduces GC interaction but increases complexity and introduces new risks like memory safety bugs.

4. Introduced manual memory reuse patterns

While not idiomatic in Go, it’s possible to write memory reuse logic manually. This requires tight control over object lifetimes, which is error-prone and goes against Go’s simplicity philosophy.

5. Offloaded hot-path sections to a separate process written in a lower-level language

Instead of mixing languages in a single binary, they could isolate performance-critical parts into separate services written in Rust or C. This avoids GC entirely for those paths but adds overhead in inter-process communication and deployment complexity.

These ideas sound complex because they are. That’s the thing: getting the same level of performance as Rust would’ve meant adding complexity, breaking idioms, and investing a lot of effort into tuning a garbage-collected language to behave like a systems language.

And remember, Discord wasn’t inexperienced with Go. They had already tried the common tuning tricks. So yes, more could have been done. But at what cost?

It comes down to this: Why keep trying to push a language beyond what it’s designed to do when there’s a tool that fits better?

Rust didn’t need hacks or tuning to solve the core issue. The moment they switched, the spikes were gone.

So even though Go might have worked with enough effort, Rust worked right away — and that’s why they made the switch.

Go Has Improved, but I Still Support Discord’s Switch

Let’s give credit where it’s due. The Go of today isn’t the same as the Go Discord used back in 2019. Since then, the language and runtime have improved a lot. Newer versions of Go have brought:

- Lower and more consistent garbage collection (GC) pause times

- Smarter allocation strategies

- Better diagnostics and profiling tools

- More mature community patterns for high-performance workloads

Long-standing tools like sync.Pool (in the standard library since Go 1.3) let you reuse memory instead of constantly allocating new objects. And the GC itself is much better at minimizing its impact in real-world applications.

So, could today’s Go handle something like Discord’s Read State service better? Probably. With enough work, tuning, and structure, a Go-based service could perform well — maybe even close to what Rust achieved.

But here’s the thing. That’s not the question Discord had to answer.

Their question was: Do we keep trying to force Go to meet our performance goals, or do we use a language that naturally avoids this problem?

Rust gave them:

- Predictable memory behavior with no GC

- Immediate performance wins with minimal optimization

- The ability to grow the cache without adding garbage-collection work

- Cleaner, safer concurrent code due to its ownership model
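On the concurrency point, here is a minimal sketch of what the ownership model enforces. Several threads bump one shared mention counter. The compiler only accepts this because the map is wrapped in `Arc` (shared ownership) and `Mutex` (exclusive access). If you share a bare `HashMap` instead and try to mutate it from the threads, the code is rejected at compile time. The function name and the channel id `42` are made up for illustration.

```rust
use std::collections::HashMap;
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical example: `threads` workers each record `mentions_per_thread`
/// @mentions for the same (made-up) channel id 42 in one shared map.
fn count_mentions_in_parallel(threads: usize, mentions_per_thread: u32) -> u32 {
    // Arc = shared ownership across threads; Mutex = one writer at a time.
    let counts: Arc<Mutex<HashMap<u64, u32>>> = Arc::new(Mutex::new(HashMap::new()));

    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let counts = Arc::clone(&counts);
            thread::spawn(move || {
                for _ in 0..mentions_per_thread {
                    // The lock guard is released at the end of this statement.
                    *counts.lock().unwrap().entry(42).or_insert(0) += 1;
                }
            })
        })
        .collect();

    for handle in handles {
        handle.join().unwrap();
    }

    // Bind to a local so the guard is dropped before `counts` goes out of scope.
    let total = counts.lock().unwrap().get(&42).copied().unwrap_or(0);
    total
}

fn main() {
    println!("total mentions: {}", count_mentions_in_parallel(4, 1_000));
}
```

No update is lost, and a data race here is a compile error rather than a production bug.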

Yes, Go could’ve worked. But Rust just fit better. The switch wasn’t about giving up — it was about picking the right tool for a high-performance job. Discord already had Rust experience, the service was small and self-contained, and the payoff was clear.

That’s why I support their decision — not because Go was bad, but because Rust made the problem disappear instead of needing to be tuned around it.

How Rust Actually Saved It

Go’s garbage collector wasn’t just freeing memory. It was walking through huge pointer graphs inside the LRU cache to figure out what could be safely collected. That traversal alone consumed CPU cycles, even when almost nothing needed to be cleaned up. And the real issue was that it happened on a timer whether the program needed it or not. This introduced periodic latency spikes that tuning could soften but never fully eliminate.

Rust doesn’t have this problem because it doesn’t rely on a garbage collector. It uses ownership and lifetimes, enforced at compile time, to know exactly when memory should be freed. As soon as a value’s owner goes out of scope, or the value is removed and not kept, it is dropped immediately. No scanning, no pausing, no GC cycles.

When a cache entry is removed, the memory is freed right away. For example:

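```rust
use std::collections::HashMap;

struct Cache {
    store: HashMap<String, String>,
}

impl Cache {
    fn evict(&mut self, key: &str) {
        // `remove` takes the entry out of the map; the returned value is not kept,
        // so the key and value Strings are dropped (and freed) right here.
        self.store.remove(key);
    }
}

fn main() {
    let mut cache = Cache { store: HashMap::new() };
    cache.store.insert("user:1/channel:10".to_string(), "last read: 42".to_string());
    cache.evict("user:1/channel:10");
    assert!(cache.store.is_empty());
}
```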

There is no garbage collector scanning the heap to decide what to free. Deallocation still costs a call into the allocator, but memory cleanup in Rust is deterministic: you know exactly when and where it happens. That makes it predictable at scale.
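To see that determinism directly, the minimal sketch below gives a hypothetical cache value (`Tracked`) a `Drop` implementation that writes to a shared log. Entry `a` is dropped exactly between the two markers around `remove`. Entry `b` is dropped when the whole map goes away. Nothing runs later or in the background.

```rust
use std::cell::RefCell;
use std::collections::HashMap;
use std::rc::Rc;

/// Hypothetical cache value that writes to a shared log when it is dropped,
/// so we can see exactly when Rust frees it.
struct Tracked {
    name: &'static str,
    log: Rc<RefCell<Vec<String>>>,
}

impl Drop for Tracked {
    fn drop(&mut self) {
        self.log.borrow_mut().push(format!("dropped {}", self.name));
    }
}

fn evict_and_log() -> Vec<String> {
    let log = Rc::new(RefCell::new(Vec::new()));
    let mut cache: HashMap<u64, Tracked> = HashMap::new();
    cache.insert(1, Tracked { name: "a", log: Rc::clone(&log) });
    cache.insert(2, Tracked { name: "b", log: Rc::clone(&log) });

    log.borrow_mut().push("before evict".to_string());
    cache.remove(&1); // the returned value is discarded, so "a" is dropped here
    log.borrow_mut().push("after evict".to_string());

    drop(cache); // dropping the map drops everything still in it ("b")
    let events = log.borrow().clone();
    events
}

fn main() {
    for event in evict_and_log() {
        println!("{event}");
    }
}
```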

In Go, the work each GC cycle does grows with the amount of live memory it has to scan, so a bigger cache means more scanning. In Rust, freeing one entry costs about the same no matter how large the rest of the cache is. That meant Discord could increase their cache size without worrying about the garbage collector slowing them down.

And while this example uses a HashMap, Discord’s write-up says the actual implementation switched to a BTreeMap in the LRU cache to optimize memory usage. That deserves its own deep dive, which we’ll save for another post.

That’s the real advantage Rust gave them. Not just “no garbage collector,” but complete control over memory behavior with zero surprise costs. It’s not just fast. It’s predictable.

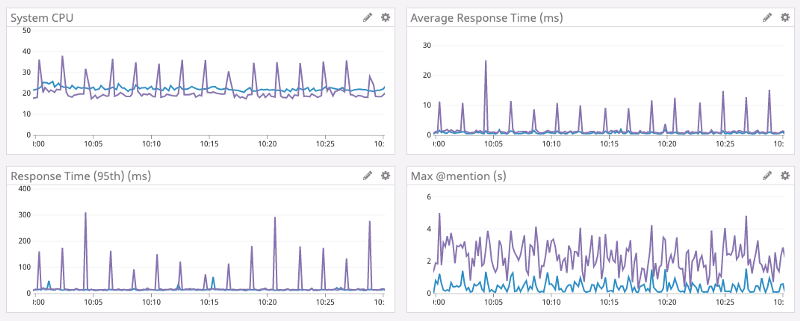

Latency spikes and response time (purple is Go, blue is Rust)

Final Thoughts – Why Every Real Engineer Should Try Rust

To be honest, I’ve explored quite a few programming languages. I started with C and C++ for competitive programming and some low-level stuff. I liked them, but I never really wanted to use them for building real projects. Then I tried Python, and honestly, it’s the coolest language in many scenarios. I’ve also used JavaScript, PHP, Java, Kotlin, Dart… you name it, mostly for specific use cases, class projects, or just curiosity.

Whenever I learn a language, I don’t just use it on the surface. I go deeper to understand how and why it works. Recently I’ve been learning Go to see what all the hype is about – and yeah, it deserves the praise. It’s simple, clean, and productive for backend work.

But no language reached me like Rust did.

For those who think Rust is only for low-level systems stuff: no, bro. Rust has everything you’d want, including powerful web frameworks like Axum, async runtimes like Tokio, amazing crates for almost anything, and a sweet community that actually cares about quality.

I’m not someone who recommends things easily. Even if something is good, I want people to research and decide for themselves. But this time, I’m really recommending Rust – not for everyone, but for real engineers. If you truly care about how your programs work, if you want control, safety, and performance at the same time, then give Rust a try.

I’ve never heard of anyone who actually learned Rust and hated it. I can’t guarantee you’ll switch your stack, but I’m 100% sure you’ll respect what it teaches you about programming.

Rust made me feel like the engineer I always wanted to be. And I think it might do the same for you.